The Effects of User Comments on Science News Engagement

May 4th, 2021

By Spencer Williams

Why Study Comments on Science News?

When you see a news article posted to Reddit (or Twitter, or Facebook, or Instagram...), what's the first thing you do? Do you read the article in full like a responsible consumer of digital media? If you're anything like me, you click the title, read a few of the top comments, get the gist of people's reactions, and maybe read the article if you decide it's worth your time. The question is, how do we make that decision? How do people decide whether to continue reading an article based on the types of comments they see?

That's what we set out to determine in this project, focusing specifically on user comments about science news. We chose science news for three main reasons:

- Scientific information is increasingly being communicated through social media like Reddit, Twitter, and Facebook. It is important to understand how the features of those platforms (like comments, in this case) can affect people's understanding of that information.

- Science communication is challenging, and online discussions about science (e.g. on the subreddit r/science) can feel overwhelming to newcomers due to heavy use of jargon. This got us interested in the difficulty of user comments, and whether technical language could potentially turn people off from reading the article.

- Science news is heavily debated in online spaces. Comments on r/science frequently critique the underlying news article or paper, offering positive or negative evaluations of its methods and/or conclusions. Because past research has shown that user comments in other domains can sway readers' opinions about articles, we wanted to test how much a comment's valence affected perceptions of a research study's methods.

To investigate the effects of comments, we ran two studies. Our first study examined the effects of comment difficulty (did it use complex or plain language?) and valence (was it positive or negative toward the study's methods?) on readers' intention to read a science news article.

Study 1: Difficult Comments Can Dissuade Users from Reading Science News

Going into this first study, we had two competing hypotheses about what would happen if participants read more difficult comments about a science news article. The first was based on the easiness effect [3], a phenomenon where people who read easier science articles become overconfident in how well they understand them. We thought that if reading an easy comment made someone overconfident in their knowledge of a topic, they might decide they already knew everything worth knowing about it and skip the article. In that case, difficult comments would make people more likely to read the article, not less.

On the other hand, past research shows that merely reading technically complex science writing can reduce people's interest in science as a whole [4]. Seeing a complex comment might make readers think the article itself is beyond their ability to understand (or at least not worth trudging through the jargon to do so). If so, the more difficult comments would actually reduce people's willingness to read the article.

Finally, we generally expected that negative comments would reduce people's interest in reading the article. However, since past research has been mixed on how much impact user comments have on readers' opinions of an article, it was also possible there would be no effect.

To test these predictions, we mocked up four Reddit comments (see the figure below for an example) that varied along two dimensions. Two comments used plain language, while the other two contained technical jargon. Two comments argued against the article's conclusions, and two supported them. We also included a control condition, in which the article was shown without any comments.

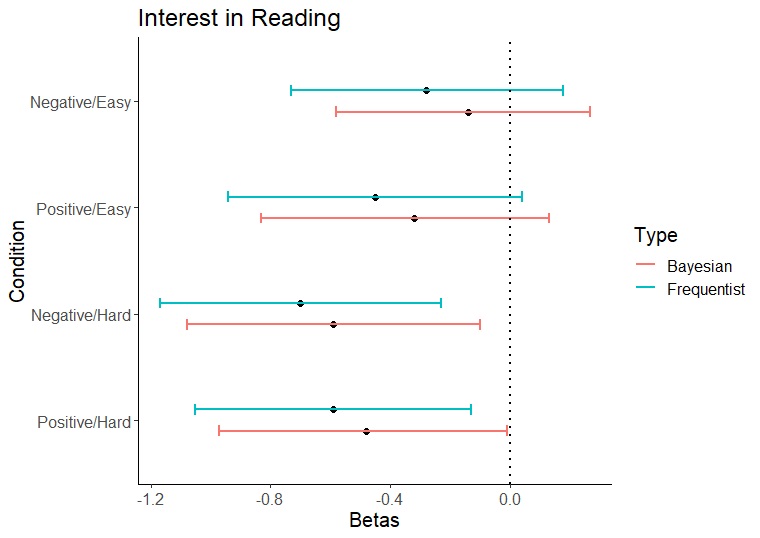

We recruited 298 participants from Amazon Mechanical Turk. We first showed each participant one of the five conditions (one of the four comments, or the no-comment control), and then asked how likely they would be to read the associated article (on a 1-5 scale). For more information about our measures and analyses, check out the full paper. In short, we compared each comment condition against the control condition to see whether it had a positive or negative effect on participants' likelihood of reading the article. We analyzed these effects using both Bayesian and frequentist methods (see the figure below). Using both approaches meant we could be fairly confident that an effect was robust when it held up under both frameworks.
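The post leaves the exact models to the full paper, so the following is only an illustration of the general idea of checking one comment condition against the no-comment control from two angles. It is a minimal sketch, assuming plain Python with the standard library: a two-sided permutation test stands in for the frequentist side, and Rubin's Bayesian bootstrap stands in for the Bayesian side. Both are swapped-in techniques, not necessarily the ones used in the paper, and the ratings are made-up toy numbers, not study data.

```python
import random
import statistics


def permutation_p_value(treatment, control, n_resamples=10_000, seed=0):
    """Two-sided permutation test on the difference in mean ratings."""
    rng = random.Random(seed)
    observed = statistics.fmean(treatment) - statistics.fmean(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    extreme = 0
    for _ in range(n_resamples):
        # Shuffle the condition labels and recompute the difference in means.
        rng.shuffle(pooled)
        diff = statistics.fmean(pooled[:n_t]) - statistics.fmean(pooled[n_t:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return (extreme + 1) / (n_resamples + 1)


def bayesian_bootstrap_mean(values, rng):
    """One posterior draw of the mean, using Dirichlet(1, ..., 1) weights
    built from normalized Gamma(1, 1) draws (Rubin's Bayesian bootstrap)."""
    weights = [rng.gammavariate(1.0, 1.0) for _ in values]
    total = sum(weights)
    return sum(w * v for w, v in zip(weights, values)) / total


def posterior_difference(treatment, control, n_draws=4_000, seed=0):
    """Posterior median and central 95% interval for mean(treatment) - mean(control)."""
    rng = random.Random(seed)
    draws = sorted(
        bayesian_bootstrap_mean(treatment, rng) - bayesian_bootstrap_mean(control, rng)
        for _ in range(n_draws)
    )
    lower = draws[round(0.025 * n_draws)]
    upper = draws[round(0.975 * n_draws) - 1]
    return statistics.median(draws), (lower, upper)


# Hypothetical 1-5 "likelihood to read" ratings, invented for illustration (NOT study data).
control = [4, 3, 5, 4, 4, 3, 5, 4, 3, 4]
difficult_comment = [2, 3, 2, 1, 3, 2, 3, 2, 2, 3]

p = permutation_p_value(difficult_comment, control)
median_diff, (lower, upper) = posterior_difference(difficult_comment, control)
print(f"permutation p = {p:.4f}")
print(f"posterior median difference = {median_diff:.2f}, 95% interval [{lower:.2f}, {upper:.2f}]")
```

Under this toy setup, an effect would count as "robust" in the post's sense only if the permutation p-value is small and the Bayesian interval also excludes zero; a real analysis would model all conditions jointly rather than running one pairwise comparison at a time.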

Overall, we found that both difficult comments reduced participants' interest in reading the article, but there was no overall effect of negative comments. This is interesting, but these data alone can't tell us why difficult comments had this effect. Perhaps participants assumed the article itself would be more difficult, or perhaps the mere presence of difficult comments reduced their interest in science [4]. To dig further, we ran a second study, this time paying closer attention to how comments affect readers' expectations about the article.

Study 2: User Comments Act as a Signal for the Article's Qualities

In Study 2, we hypothesized that the qualities of a comment might serve as a signal for the qualities of its associated article. For example, if people read a difficult comment, we expected them to assume the article itself was similarly difficult, which would in turn reduce their willingness to read it. This kind of heuristic could explain our results in Study 1. To test it, we needed to collect more data about participants' expectations of the article itself.

Study 2 was similar to Study 1, with a few key differences. First, since we didn't find an effect of valence, we removed it as a variable. In its place, we added information quality (whether the comment contained useful information) and entertainment value (whether the comment was entertaining), both of which are important to readers when selecting what news to read online [2].

Second, after participants read our comments, they answered a few questions about their expectations of the article, such as "How difficult do you think it would be to understand the article?" or "How useful do you think the information in the article will be?"

Third, we included a few open-ended questions for participants to fill out, such as "Thinking back to when you first saw the Reddit snapshot, how did the information on the page (the headline, any comments you saw, etc.), affect what you thought about the article?"

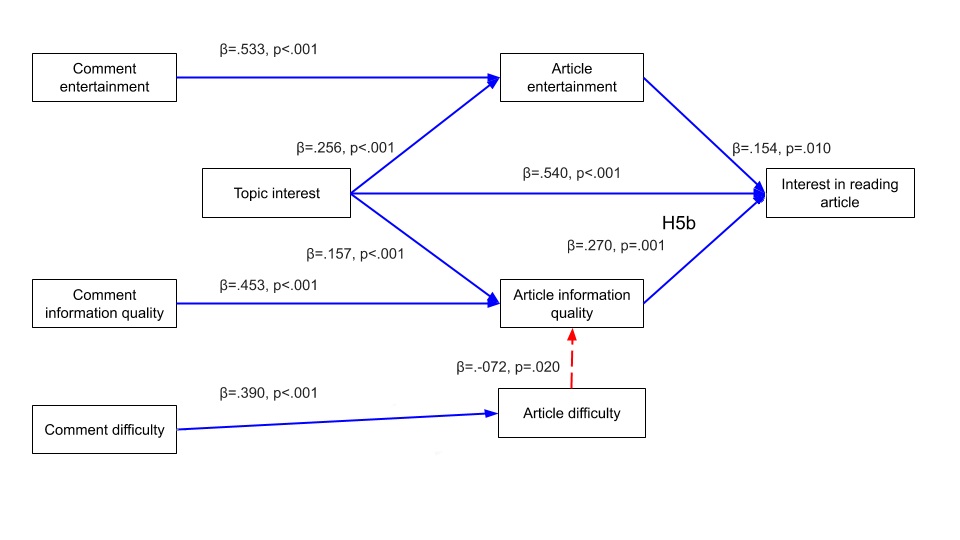

The full paper has much more detailed analyses, but our overall results are best summarized in the figure below. In general, we found that the qualities of the comments signalled that the article would share similar qualities. So, if participants read a hard comment, they expected the article to be harder. If the comment had more useful information, they expected the same from the article.

So what does this mean?

Based on these results, it looks like social media users generally expect the qualities of a science article to match up to the qualities of its comments. So, if an article is surrounded by high-quality, clear commentary, readers may expect more from it and decide to click through. On the other hand, if comments are (for example) too difficult (a known issue on r/science [1]), potential readers could be turned off from it.

This could be especially problematic for low-quality, inaccurate comments. If people read a poorly-written or unhelpful comment, they may choose not to read the actual article. In that case, their only impression of the article would be based on the comment itself, and they may come away with a negative impression of the underlying research. If poor comments discourage engagement with scientific articles, it is important for social media sites to self-moderate effectively and promote high-quality discussion.

Overall, this research shows that the user-generated comments surrounding a piece of science news can color readers' expectations about the article they're discussing, affecting their impressions and helping them decide whether or not to read it. So, next time you leave a comment about a science news story, remember that you may be providing a kind of advertisement for the article itself, and write accordingly!

References

[1] Jones, R., Colusso, L., Reinecke, K., & Hsieh, G. (2019, May). r/science: Challenges and opportunities in online science communication. In Proceedings of the 2019 CHI conference on human factors in computing systems (pp. 1-14).

[2] O'Brien, H., Freund, L., & Westman, S. (2013). What motivates the online news browser? News item selection in a social information seeking scenario.

[3] Scharrer, L., Rupieper, Y., Stadtler, M., & Bromme, R. (2017). When science becomes too easy: Science popularization inclines laypeople to underrate their dependence on experts. Public Understanding of Science, 26(8), 1003-1018.

[4] Shulman, H. C., Dixon, G. N., Bullock, O. M., & Colón Amill, D. (2020). The effects of jargon on processing fluency, self-perceptions, and scientific engagement. Journal of Language and Social Psychology, 39(5-6), 579-597.